SVG filters in HTML

In modern browsers there’s a lot of functionality that’s rarely used because it’s hard to understand, hard to use, or both. One such thing is SVG, and especially SVG filters. In the past they were too unreliable for use on the web and, most importantly, they required you to use SVG (although you could link your original HTML back in through foreignObject). Well, no more.

With Firefox 3.5 we get stable filter support (at least nothing I wanted to use is missing), and we can now use filters in our HTML through CSS.

But there’s still a problem with the documentation. Type a filter name like “feDisplacementMap” into Google and you get fewer than 3000 results, and two thirds of those are either bug reports for engines implementing it or pages trying to sum up the spec. The spec itself is often hard to understand, too, and is in desperate need of clarification and more complex examples.

So I’m going to do my share and explain the aforementioned feDisplacementMap and how to use it to do cool stuff.

Let’s look at the cool demo first

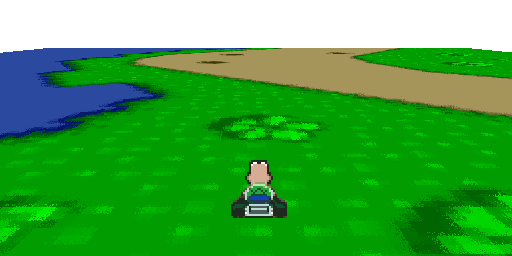

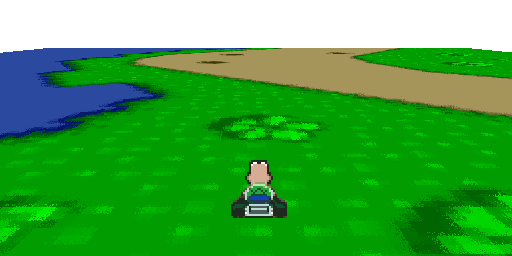

If you just came here because somebody who linked here mentioned Mario Kart, I should have the decency to give you a link to the demo straight away. Go here. Be aware, though, that this is not actually a game, just a demo showing what you can do with feDisplacementMap. There’s no collision detection, no enemies, no goals.

All the rendering is done through an SVG filter, except for the controls and the initial 2D transformation, which are done with Canvas (you can do this with pure SVG as well, like I did here; it’s just a bit faster with Canvas).

If you want to know how it’s done and how you can use the same technique to do other cool effects, read on.

What’s feDisplacementMap and why should you care?

feDisplacementMap is an SVG filter for pixel mapping. Basically, you provide a source image and a map of which pixel goes where, and that’s it. If you have to warp pixels around, there’s nothing you can do in a browser that would be faster. It’s also probably the most versatile filter you’ll find in SVG.

What you can do with it

Basically anything where you have to transform different images the same way over and over again. That could be anything, like a lens effect, mirroring, or a perspective deformation.

How it works

The first thing to look out for is that the displacement filter does not actually allow you to “push” pixels around. If it did, you’d typically end up with a lot of empty spots: places nothing was pushed to, while the color that was originally there had been pushed away.

Instead, it works the other way around: each pixel of the map specifies which pixel of the source image should be shown in its place, using the distance from the current pixel to specify it. In other words, each pixel of the map contains an instruction like “show the pixel from the source image that’s 12 pixels to the left and 4 pixels up from this one”, not “move this pixel 12 pixels to the right and 4 pixels down”. It’s a subtle difference, but one you should be aware of.
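The following is a minimal one-dimensional sketch in JavaScript that makes the pull direction concrete. It is not how any browser implements the filter. Every output pixel reads from `source[i + offset[i]]`, and reads from outside the source come back as `null`, which stands in for a transparent pixel. The name `pullRow` is made up for illustration.

```javascript
// Hypothetical helper: pull-style displacement on a single row of pixels.
// Each output pixel *reads* from the source at its own index plus an offset;
// nothing is ever pushed, so every output pixel gets exactly one value.
function pullRow(sourceRow, offsets) {
  return sourceRow.map((_, i) => {
    const from = i + offsets[i];
    // Reads outside the source yield null, standing in for "transparent".
    return from >= 0 && from < sourceRow.length ? sourceRow[from] : null;
  });
}

// To make the row appear shifted one pixel to the right,
// every output pixel has to read from one pixel to its *left* (offset -1).
const shifted = pullRow(["a", "b", "c", "d"], [-1, -1, -1, -1]);
console.log(shifted); // [ null, 'a', 'b', 'c' ]
```

Note the sign flip: a visual move to the right is encoded as a negative offset, and the first pixel has nothing to read, so it ends up empty.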

Turning the raw offsets into a displacement map

This information is encoded into a color so that the displacement map can be saved as an image. The encoding is actually quite simple. The distance to the source pixel is split into an X distance and a Y distance. Each distance is then spread across the color range (0-255), where 0 means a lot (we’ll talk about how much in a second) to the left/up, 255 means a lot to the right/down, and the middle of the range means no displacement. Then each one is saved to a color channel, for example red for X and green for Y (you can later specify in the filter which channel you used for what).

The actual distance is calculated later on in the filter by taking the color value relative to the middle of the range and multiplying it by a scale you specify, so how far away the source pixel actually is depends on the scale you use. Set it to 255 and you’ll mostly get what you expect: one color step equals one pixel, with 0 meaning about 127.5 pixels to the left/up and 255 about 127.5 pixels to the right/down.
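For reference, the SVG spec defines the lookup as P'(x,y) = P(x + scale * (XC(x,y) - 0.5), y + scale * (YC(x,y) - 0.5)), where XC and YC are the selected channels normalized to 0..1. The sketch below assumes 8-bit channels and puts that formula into JavaScript together with an inverse for building maps. The function names are illustrative only.

```javascript
// Offset in pixels that feDisplacementMap reads for an 8-bit channel value,
// following the spec formula: offset = scale * (value / 255 - 0.5).
function decodeOffset(channelValue, scale) {
  return scale * (channelValue / 255 - 0.5);
}

// Inverse (hypothetical helper): the 8-bit channel value, rounded and
// clamped to 0..255, that comes closest to a desired offset.
function encodeOffset(offset, scale) {
  const value = Math.round((offset / scale + 0.5) * 255);
  return Math.min(255, Math.max(0, value));
}

console.log(decodeOffset(0, 255));   // -127.5
console.log(decodeOffset(255, 255)); // 127.5
```

With scale 255 no channel value means exactly zero. 127 reads half a pixel to the left/up and 128 half a pixel to the right/down, and rounding in `encodeOffset` therefore lands within half a pixel of the requested offset.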

Things to watch out for

There are mainly two things that can cause you a lot of headaches if you’re not aware of them:

- By default, SVG filters work in the linearRGB color space, so the channel values of your displacement map get converted before they are used and no longer mean what you painted. Why they chose that default when they wrote SVG I’ll never understand; suffice it to say you’d better set the color space to sRGB if you want to retain your sanity.

- The source image isn’t automatically wrapped, so if your displacement map pulls in a pixel that is outside the source image, it will appear transparent.
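To show how large the color-space effect is, the sketch below assumes that the map's channels are converted with the standard sRGB-to-linear transfer function before the displacement formula is applied. The SVG 1.1 spec says color-interpolation-filters applies to the in2 (map) input of feDisplacementMap. The helper names are illustrative.

```javascript
// Standard sRGB -> linear-light conversion for one 8-bit channel (result in 0..1).
function srgbToLinear(channelValue) {
  const s = channelValue / 255;
  return s <= 0.04045 ? s / 12.92 : Math.pow((s + 0.055) / 1.055, 2.4);
}

// Offset feDisplacementMap reads for a channel value already normalized to 0..1.
function offsetFromNormalized(normalized, scale) {
  return scale * (normalized - 0.5);
}

const neutral = 128; // painted as "roughly no displacement"
console.log(offsetFromNormalized(neutral / 255, 255));         // about 0.5 (sRGB)
console.log(offsetFromNormalized(srgbToLinear(neutral), 255)); // roughly -72.5 (linearRGB)
```

So in linearRGB, a pixel you meant as "stay put" ends up reading about 72 pixels to the left/up.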

The good stuff: absolute mapping

The thing that limits feDisplacementMap most is that it can only use relative offsets. There is no way to say that you want the pixel at 10,12 to appear at a certain point, just the one 3 pixels to the left and 2 pixels up… but with a little trickery you can still do it:

The trick is to tile the source image so that every source pixel is within reach of every target pixel. In other words: resize it to 256×256 and tile it across the size of your destination surface. Then all you have to do is subtract the current position from each absolute position in your mapping and finally wrap the result into the 0-255 range (modulo 256).
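Here is a small JavaScript sketch of the modulo arithmetic behind the trick. It uses plain integer offsets and ignores how the offset is later encoded as a color. The helper names are made up for illustration. The relative value is `(absolute - current) mod 256`, and because the source repeats every 256 pixels, reading that far away always lands on the wanted texel.

```javascript
const TILE = 256;

// Wrap any integer into 0..255, also for negative inputs.
function mod256(n) {
  return ((n % TILE) + TILE) % TILE;
}

// Relative offset (always 0..255) that lets the target position `current`
// fetch the absolute source coordinate `absolute` from a 256-periodic tiling.
function relativeOffset(absolute, current) {
  return mod256(absolute - current);
}

// Reading from the tiled texture: coordinate c shows texel c mod 256.
function tiledTexel(coordinate) {
  return mod256(coordinate);
}

// Target pixel 700 wants source texel 10: an offset of 78 lands on 778,
// which is texel 10 of the fourth tile (3 * 256 + 10 = 778).
const offset = relativeOffset(10, 700);
console.log(offset);                   // 78
console.log(tiledTexel(700 + offset)); // 10
```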

Adding filters to your HTML

Applying a filter is really easy in Firefox 3.5. Just add a filter CSS rule to any element, like this:

filter:url(#myFilterId);

Specifying the actual filter is a bit more difficult, but still doable. You can either put it into a separate SVG and link it in with

filter:url(mySvgFilters.svg#myFilterId);

or, if you want to add it inline, you have to include the SVG namespace in your HTML declaration and add an SVG element to your body. You’ll have to put “svg:” in front of the SVG elements to tell the browser that they are SVG elements and not some unknown HTML elements. Namespace prefixes like this only work when the page is parsed as XHTML, i.e. served as application/xhtml+xml.

<html
    xmlns="http://www.w3.org/1999/xhtml"
    xmlns:svg="http://www.w3.org/2000/svg"
    xmlns:xlink="http://www.w3.org/1999/xlink">
  <body>
    <svg:svg height="0">
      <!-- your svg:filter elements go here -->
    </svg:svg>
  </body>
</html>

Choose whichever way you want; as long as you’ve got the filter inside an SVG element somewhere, you’ll be fine.

Now, for the actual filter. This will add a filter with sane settings.

<svg:filter
    id="myFilterId"
    color-interpolation-filters="sRGB"
    filterUnits="userSpaceOnUse"
    x="0" y="0" width="768" height="512">
  <!-- filter operations go here -->
</svg:filter>

That’s an awful lot of strange settings, I know, but you’ll get the hang of it:

- color-interpolation-filters="sRGB" makes the filter work with normal sRGB color values instead of the default linearRGB color space.

- filterUnits="userSpaceOnUse" tells the filter to interpret x, y, width and height in user-space units (pixels, here) instead of as fractions of the element’s bounding box, which is usually a lot easier to understand. You can still use percentages like “100%”, but plain numbers will be pixels.

- x="0" y="0" width="768" height="512" specify the dimensions of the filter region. Naturally, they should fit your element’s dimensions. Don’t forget the x/y declaration, because otherwise the filter will default to a position of -10%,-10%.

- id="myFilterId" I think this is pretty self-explanatory.

Writing a filter

A filter consists of one or more filter operations, which are basically mini-filters that do simple things like blurring. A filter combines these operations into one big processing chain.

The basic format for a single operation is always like this:

<svg:feSomeOperation in="someInput" result="someOutput" x="0" y="0" width="256" height="256" />

If an operation takes an input, you can specify an “in” attribute (some operations also need a second input, in which case you also specify an “in2” attribute). If you don’t specify it, the operation will simply use the output from the previous one. There are also some special inputs like SourceGraphic, which contains an image of the element to which the filter was applied.

If you want to use an operation’s output anywhere other than as the implicit input of the very next operation, you have to provide a “result” attribute. Just give it any name, and you’ll be able to reuse the output by specifying that name as another operation’s “in” (or “in2”) attribute.

And lastly, you need to specify the rectangle (x, y, width and height) that the operation works on.
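The input rules can be modeled in a few lines of JavaScript. This is a toy, not a browser API. Primitives are plain objects, and only `in` is resolved. One rule comes from the SVG spec rather than from the text above: the very first primitive without an `in` reads SourceGraphic.

```javascript
// Resolve which input each filter primitive reads:
// an explicit `in` wins; otherwise the previous primitive's output is used,
// and the very first primitive falls back to SourceGraphic.
function resolveInputs(primitives) {
  return primitives.map((primitive, index) => {
    if (primitive.in) return primitive.in;
    if (index === 0) return "SourceGraphic";
    const previous = primitives[index - 1];
    // Unnamed outputs are labelled by position, for illustration only.
    return previous.result || `(output of #${index - 1} ${previous.name})`;
  });
}

// A subset of the demo chain further down (feImage omitted, it takes no input).
const chain = [
  { name: "feOffset" },
  { name: "feTile", result: "source" },
  { name: "feDisplacementMap", in: "source", in2: "displacement", result: "out" },
  { name: "feComposite", in2: "displacement" },
];
console.log(resolveInputs(chain));
```

The last entry resolves to "out": feComposite never names its first input, yet it still reads the displacement result because that is the previous operation.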

That’s pretty much it. So let’s look at an actual filter, like the one I used in the Mario Kart demo.

The Perspective Texturing Demo filter

Note that the element you apply the filter to should be 256 pixels bigger (on each axis) than the output. This is necessary so that the displacement at the very edge can reach “beyond” the source image.

<svg:feOffset width="256" height="256" />

First we need to crop the image, since we only want the top-left 256×256 pixels. feOffset is actually meant to move the image around, but since we didn’t specify any offsets via the “dx”/“dy” attributes, it will just crop the image to the given dimensions.

<svg:feTile width="100%" height="100%" result="source" />

Now we fill an image the size of our element with the output of the previous cropping operation. The next operation doesn’t consume this output directly (it loads the displacement map instead), so we have to save it as “source”.
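As a mental model, here is a CPU sketch in JavaScript of what the crop-then-tile pair produces. It assumes one value per pixel in a flat, row-major array for brevity. The function name is hypothetical, and browsers don't compute it this way.

```javascript
// Repeat the top-left tileSize×tileSize block of `input` (inputWidth pixels
// wide) across a width×height output, like feOffset (crop) + feTile.
function cropAndTile(input, inputWidth, tileSize, width, height) {
  const out = new Array(width * height);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const tx = x % tileSize; // position inside the tile
      const ty = y % tileSize;
      out[y * width + x] = input[ty * inputWidth + tx];
    }
  }
  return out;
}

// A 3×3 input, cropped to its top-left 2×2 block and tiled over 5×3:
const texture = [0, 1, 2, 3, 4, 5, 6, 7, 8];
console.log(cropAndTile(texture, 3, 2, 5, 3));
// three rows: [0,1,0,1,0], [3,4,3,4,3], [0,1,0,1,0]
```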

<svg:feImage x="128" y="128" width="512" height="256"
    xlink:href="fdisplacement512.png" result="displacement"/>

Then we load the actual displacement map. Since the displacement can reach 128 pixels to the top/left and 128 pixels to the bottom/right, the map should be 256 pixels smaller than our element and placed at 128,128. That way no displacement will reach outside the source image.
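The layout arithmetic can be written down as a JavaScript sketch. It assumes scale 255, so a channel value reads between -127.5 and +127.5 pixels away (see the spec formula for feDisplacementMap). The function names are hypothetical. The check confirms that even the most extreme reads from the map's edges stay inside the element.

```javascript
// Region layout derived from the output size: the element (and filter region)
// is 256 px larger on each axis, and the map sits 128 px in from the top-left.
function demoLayout(outputWidth, outputHeight) {
  const margin = 128;
  return {
    element: { width: outputWidth + 2 * margin, height: outputHeight + 2 * margin },
    map: { x: margin, y: margin, width: outputWidth, height: outputHeight },
  };
}

// With scale 255 the furthest reads are 127.5 px beyond the map's first
// and last pixel on each axis; they must stay within the element.
function readsStayInside({ element, map }) {
  const inside = (pos, size, extent) =>
    pos - 127.5 >= 0 && pos + size - 1 + 127.5 <= extent - 1;
  return inside(map.x, map.width, element.width) &&
         inside(map.y, map.height, element.height);
}

const layout = demoLayout(512, 256);
console.log(layout.element, layout.map, readsStayInside(layout));
// element 768×512, map 512×256 at 128,128, reads stay inside: true
```

These numbers match the filter region (768×512) and the feImage/feDisplacementMap rectangles used in this demo.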

<svg:feDisplacementMap x="128" y="128" width="512" height="256"
    color-interpolation-filters="sRGB" in="source" in2="displacement"
    scale="255" xChannelSelector="R" yChannelSelector="G" result="out" />

This is the actual displacement. Nothing special here, except that we set the scale to 255 to get a mapping where a color difference of 1 actually means a one-pixel shift.

<svg:feComposite in2="displacement" operator="in" />

The composite operation with operator “in” uses the alpha channel of the displacement map as a mask for the final image, so only the parts where the map is opaque remain visible.
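operator="in" keeps the first input only where the second is opaque. The spec's formula on premultiplied colors is result = A * alphaB. Here it is per pixel as a JavaScript sketch. The helper name is made up, and RGBA values are assumed to be premultiplied and in 0..1.

```javascript
// feComposite operator="in" for one premultiplied RGBA pixel:
// every channel of the first input is scaled by the second input's alpha.
function compositeIn(pixel, maskPixel) {
  const alphaB = maskPixel[3];
  return pixel.map((channel) => channel * alphaB);
}

console.log(compositeIn([0.2, 0.4, 0.6, 1], [0.5, 0.5, 0, 1])); // [ 0.2, 0.4, 0.6, 1 ]
console.log(compositeIn([0.2, 0.4, 0.6, 1], [0.5, 0.5, 0, 0])); // [ 0, 0, 0, 0 ]
```

The mask's color channels are ignored. Only its alpha matters, which is why transparent areas of the displacement map stay transparent in the output.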

<svg:feOffset x="0" y="0" width="512" height="256" dx="-128" dy="-128" />

Since we placed our displacement map at 128,128, we have to move the result back to 0,0.

How to create an absolute map with your favourite image editing application

Step one: Creating the neutral image.

The neutral image simply maps each pixel to the same one in the source image. It’s what you always start with, and it never changes.

You can simply use this image, but if you want to create it yourself (which is a good idea if your image editor supports more than 8 bits per channel, since you can use dithering to create a bilinear-filtering-like effect), here’s how to do it.

Just fire up your favorite photo editor and create two grayscale images with the dimensions you want the output to have, and fill both with a gradient from black to white: one horizontally, one vertically. Also create a solid black image with the same dimensions.

Then combine them into one color image, with each grayscale image becoming one color channel. For example, you could use the horizontal one as red, the vertical one as green and the black one as blue. If you now check the colors and positions in the image, they should match: the pixel at x:10%,y:20% should have the color red:10%,green:20%,blue:0%.
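If you'd rather generate the neutral image with a script than with an editor, here is an 8-bit JavaScript sketch. It does not dither. The function name is hypothetical. Red ramps with x, green with y, blue stays 0 and alpha is fully opaque.

```javascript
// Neutral (identity) absolute map as RGBA bytes, row-major.
// Values run from 0 at the first column/row to 255 at the last one.
function neutralMap(width, height) {
  const data = new Uint8ClampedArray(width * height * 4);
  const xSteps = Math.max(1, width - 1);  // avoid dividing by zero for 1-px maps
  const ySteps = Math.max(1, height - 1);
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const i = (y * width + x) * 4;
      data[i + 0] = Math.round((x * 255) / xSteps); // red = horizontal gradient
      data[i + 1] = Math.round((y * 255) / ySteps); // green = vertical gradient
      data[i + 2] = 0;                              // blue = solid black
      data[i + 3] = 255;                            // fully opaque
    }
  }
  return data;
}

const identity = neutralMap(4, 4);
console.log(identity.length); // 64 (4 × 4 pixels × 4 channels)
```

In a browser, the array can be turned into an image with `new ImageData(data, width, height)` and drawn with a 2D context's `putImageData`.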

Step two: Deforming the image.

You can use any deformation filter you want. For the demo I obviously used the perspective deformation tool, but you can use anything that doesn’t alter the colors of the image, such as lens, wave or barrel deformations.
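A deformation can also be scripted instead of done in an editor. The JavaScript sketch below uses an inverse mapping with nearest-neighbour sampling, chosen for simplicity. Copying whole pixels means the encoded coordinates are never blended, and samples that fall outside the map become fully transparent. The function name and the mirror example are illustrative assumptions, not the tool used for the demo.

```javascript
// Deform an RGBA map: sourceCoords(x, y) returns where in the undeformed map
// output pixel (x, y) should sample from (an inverse mapping).
function deformMap(map, width, height, sourceCoords) {
  const out = new Uint8ClampedArray(map.length); // all zero = transparent
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const [sx, sy] = sourceCoords(x, y).map(Math.round); // nearest neighbour
      if (sx < 0 || sy < 0 || sx >= width || sy >= height) continue;
      const from = (sy * width + sx) * 4;
      const to = (y * width + x) * 4;
      for (let c = 0; c < 4; c++) out[to + c] = map[from + c];
    }
  }
  return out;
}

// Mirror a tiny 4×1 map horizontally (red encodes x as 0, 10, 20, 30).
const tiny = new Uint8ClampedArray([0, 0, 0, 255, 10, 0, 0, 255, 20, 0, 0, 255, 30, 0, 0, 255]);
const flipped = deformMap(tiny, 4, 1, (x, y) => [3 - x, y]);
console.log(Array.from(flipped).filter((_, i) => i % 4 === 0)); // [ 30, 20, 10, 0 ]
```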

Step three: Changing the absolute source values to relative values.

This one is a little more complicated. Since feDisplacementMap doesn’t really allow you to map a source pixel directly to a target pixel, we have to subtract each pixel’s own position to convert the absolute position we have so far into a relative one. So basically, you have to load the image we created into something that can run a bit of code on each pixel and run something like the following code on each one (you can just save this script and exchange the source image path for yours):

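// mapImage: RGBA bytes of the deformed absolute map (e.g. the data of a canvas ImageData), width × height pixels
for (let currentY = 0; currentY < height; currentY++) {
  for (let currentX = 0; currentX < width; currentX++) {
    const offsetInRgbaArray = (currentY * width + currentX) * 4;
    const absoluteSourceX = mapImage[offsetInRgbaArray + 0]; // red = absolute source X
    const absoluteSourceY = mapImage[offsetInRgbaArray + 1]; // green = absolute source Y
    const sourceAlpha = mapImage[offsetInRgbaArray + 3];
    // only convert fully opaque pixels; transparent ones are masked out later anyway
    if (sourceAlpha > 254) {
      // absolute -> relative; +16384 (a multiple of 256) keeps the value positive before the modulo
      mapImage[offsetInRgbaArray + 0] = (absoluteSourceX - currentX + 16384) % 256;
      mapImage[offsetInRgbaArray + 1] = (absoluteSourceY - currentY + 16384) % 256;
      mapImage[offsetInRgbaArray + 2] = 0;
      mapImage[offsetInRgbaArray + 3] = 255;
    }
  }
}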

And that’s it. Try every transformation you can think of, like a lens deformation to create a globe that you can travel.